Project information

- Category: Computer Vision

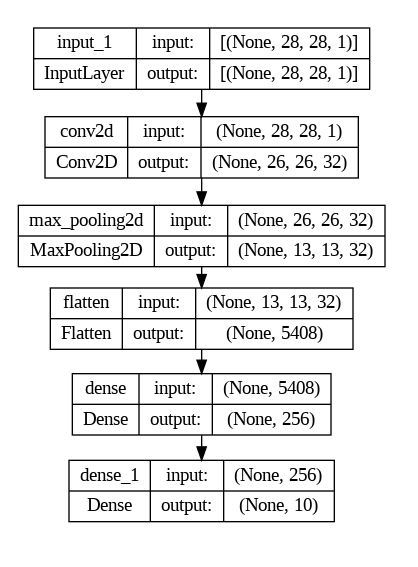

- Skills Used: Python, TensorFlow, SHAP, CNN

- Find notebook here: GitHub: Explaining CNN with Shapley Values

Summary:

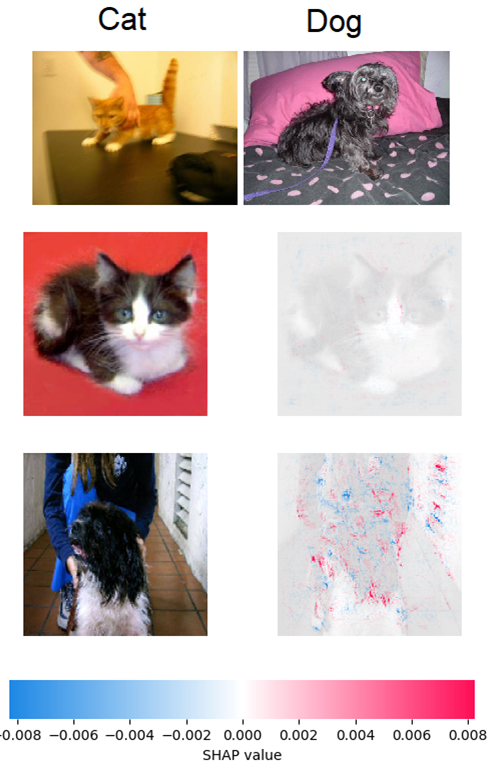

In this project, we used Shapley values, a concept from cooperative game theory, to explain the predictions of machine learning models. Shapley values are a model-agnostic way of attributing a share of the model output to each input feature. A feature's share is its marginal effect on the output when it is added to a subset of the other features, averaged over all such subsets. Shapley values satisfy several desirable properties:

- Efficiency: The Shapley values of all features sum to the difference between the model output for the input and the baseline (expected) model output.

- Symmetry: If two features make the same marginal contribution to every subset of the other features, they have the same Shapley value.

- Dummy: If a feature never changes the model output, whichever other features are present, its Shapley value is zero.

- Additivity: If a model's output is the sum of the outputs of simpler models, the Shapley value of a feature for the combined model is the sum of its Shapley values for the simpler models.
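
As a minimal sketch of these properties (not the code from the project notebook), the following computes exact Shapley values for a hypothetical three-feature toy function by enumerating every coalition. The names `toy_model`, `other_model`, `x`, and `baseline` are illustrative assumptions, and absent features are replaced by their baseline value, which is one common convention among several.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, n_features):
    """Exact Shapley values for a set function over n_features players."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            # Weight of a coalition S of this size: |S|! (n - |S| - 1)! / n!
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when added to S.
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi


# Hypothetical input and baseline (assumptions for illustration only).
x = [1.0, 1.0, 3.0]
baseline = [0.0, 0.0, 0.0]


def toy_model(z):
    # Features 0 and 1 play identical roles; feature 2 is ignored.
    return 2.0 * z[0] + 2.0 * z[1] + z[0] * z[1]


def other_model(z):
    # A second toy model that depends only on feature 2.
    return 4.0 * z[2]


def make_value_fn(model):
    """Coalition value: model output with absent features set to baseline."""
    def value_fn(coalition):
        z = [x[j] if j in coalition else baseline[j] for j in range(len(x))]
        return model(z)
    return value_fn


phi = shapley_values(make_value_fn(toy_model), 3)
phi_other = shapley_values(make_value_fn(other_model), 3)
phi_sum = shapley_values(
    make_value_fn(lambda z: toy_model(z) + other_model(z)), 3)

print(phi)      # approximately [2.5, 2.5, 0.0]
print(phi_sum)  # approximately [2.5, 2.5, 12.0]
```

Here efficiency shows up as `sum(phi)` matching `toy_model(x) - toy_model(baseline)`, symmetry as `phi[0]` equalling `phi[1]`, dummy as `phi[2]` being zero, and additivity as `phi_sum` equalling the element-wise sum of `phi` and `phi_other`. Exact enumeration grows exponentially with the number of features, so it is only practical for toy cases like this one; for image models, libraries such as SHAP rely on approximations instead.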